Course Content

- Intro (0:45)

- The RNN Encoder-Decoder vs Attention mechanism (4:08)

- The Attention Layer (6:25)

- The Bahdanau Attention (4:40)

- The Luong Attention (3:13)

- Implementing in PyTorch (2:18)

- Implementing the Bahdanau attention (9:32)

- Implementing the Luong attention (7:42)

- Implementing the Decoder (10:51)

- Putting everything together (2:32)

- Outro (0:37)

- Intro (1:01)

- The Overall Architecture (5:10)

- The Position Embedding (13:07)

- The Encoder (5:23)

- The Decoder (6:36)

- Implementing the Position Embedding (4:16)

- Implementing the Position-Wise Feed-Forward Network (1:54)

- Implementing the Encoder Block (2:44)

- Implementing the Encoder (2:58)

- Implementing the Decoder Block (3:56)

- Implementing The Decoder (3:12)

- Implementing the Transformer (2:35)

- Testing the code (8:05)

- Outro (0:48)

Damien Benveniste, PhD

Requirements

- Intermediate knowledge of Python programming

- Knowledge of PyTorch can be helpful

- Some knowledge of Machine Learning can be helpful

Description

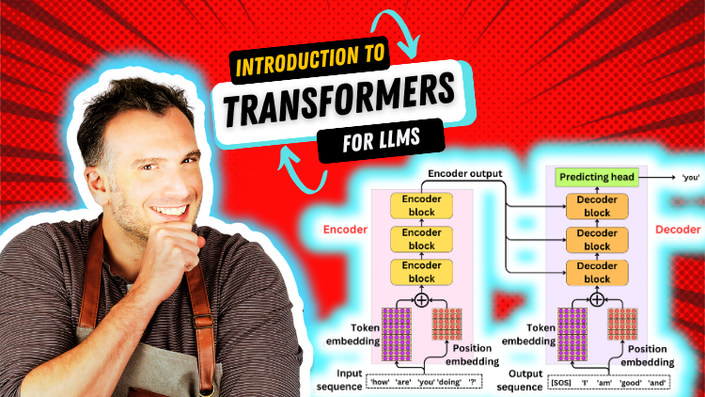

Welcome to the Introduction to Transformers for Large Language Models. Very recently, we saw a revolution with the advent of Large Language Models. It is rare that something changes the world of Machine Learning that much, and the hype around LLM is real! That's something that very few experts predicted, and it's essential to be prepared for the future.

This course is for Machine Learning enthusiasts who want to understand the inner workings of Transformer architecture. We are going to explore the different models that led to that discovery back in 2017. From the RNN Encoder-Decoder architecture, passing by the Bahdanau and Luong Attention mechanisms, up to the self-attention mechanism. We are going to dive into the strategy to parse text into tokens before feeding them to the LLMs and how LLMs can be tuned to generate text.

Each section will divided into the conceptual part and the coding part. I recommend digging into both aspects, but feel free to focus on the concepts or the coding if it matters more to you. I made sure to separate the two for learning flexibility. In the coding part, we are going to see how the different models are implemented in PyTorch, and we are going to explore some of the capabilities of the Transformers Python package by Hugging Face. However, this is not a PyTorch course, and I will not dive into the details of the framework.

Topics covered in that course:

- The RNN Encoder-Decoder Architecture

- The Attention Mechanism Before Transformers

- The Self-Attention Mechanism

- Understanding the Transformer Architecture

- How do we create Tokens from Words

- How LLMs Generate Text

- Transformers' applications beyond LLMs

Who this course is for:

- Machine Learning enthusiasts who want to improve their knowledge of Large Language Models

- Intermediate Python developers curious to learn the ins and outs of the Transformer architecture