The RNN Encoder-Decoder Architecture: Concepts

The RNN encoder-decoder architecture was developed by Yoshua Bengio, Dzmitry Bahdanau, Kyunghyun Cho, and others.

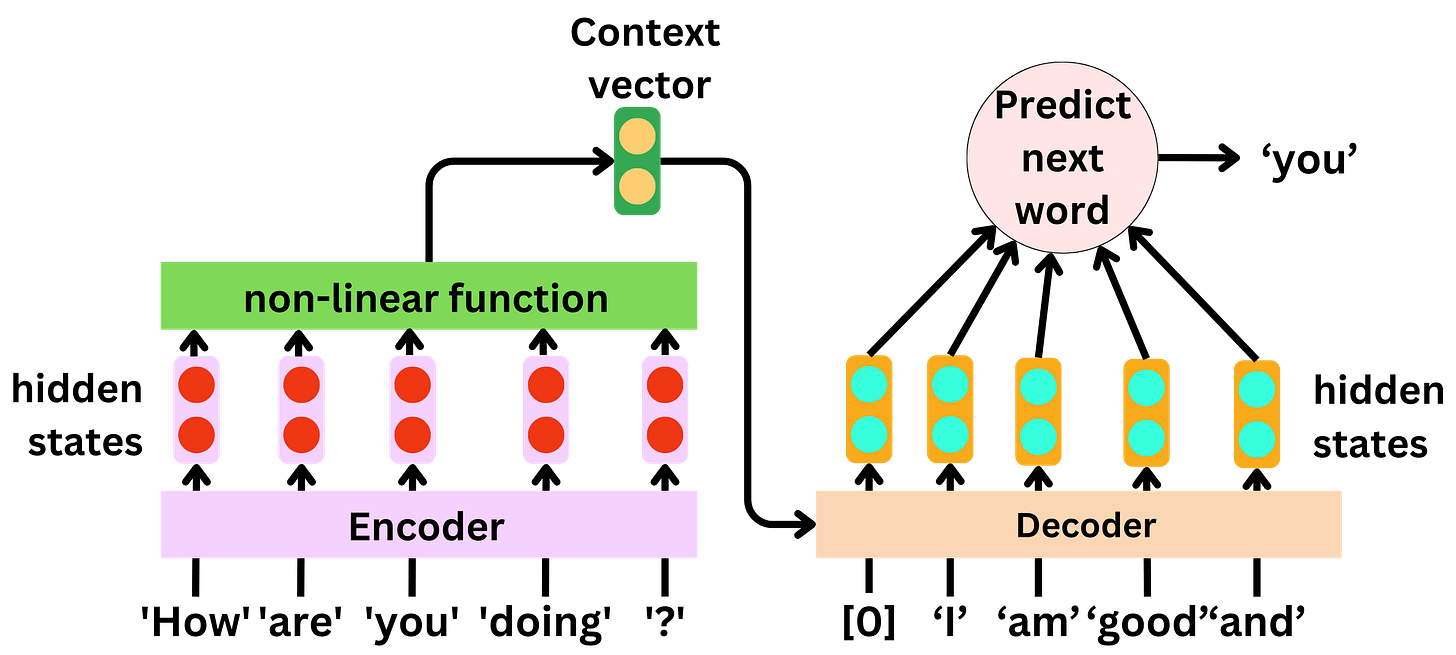

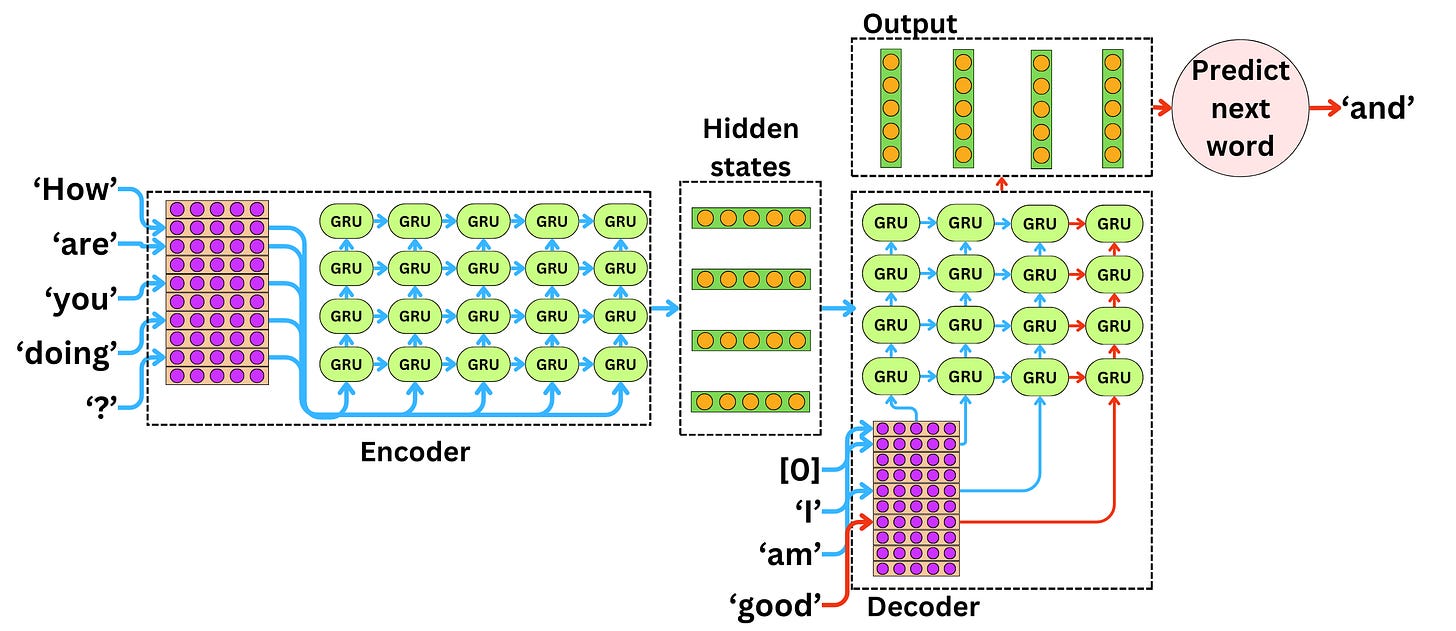

The RNN encoder-decoder architecture reads the input sentence and then predicts the output sentence one word at a time, feeding each predicted word back in to predict the next one:

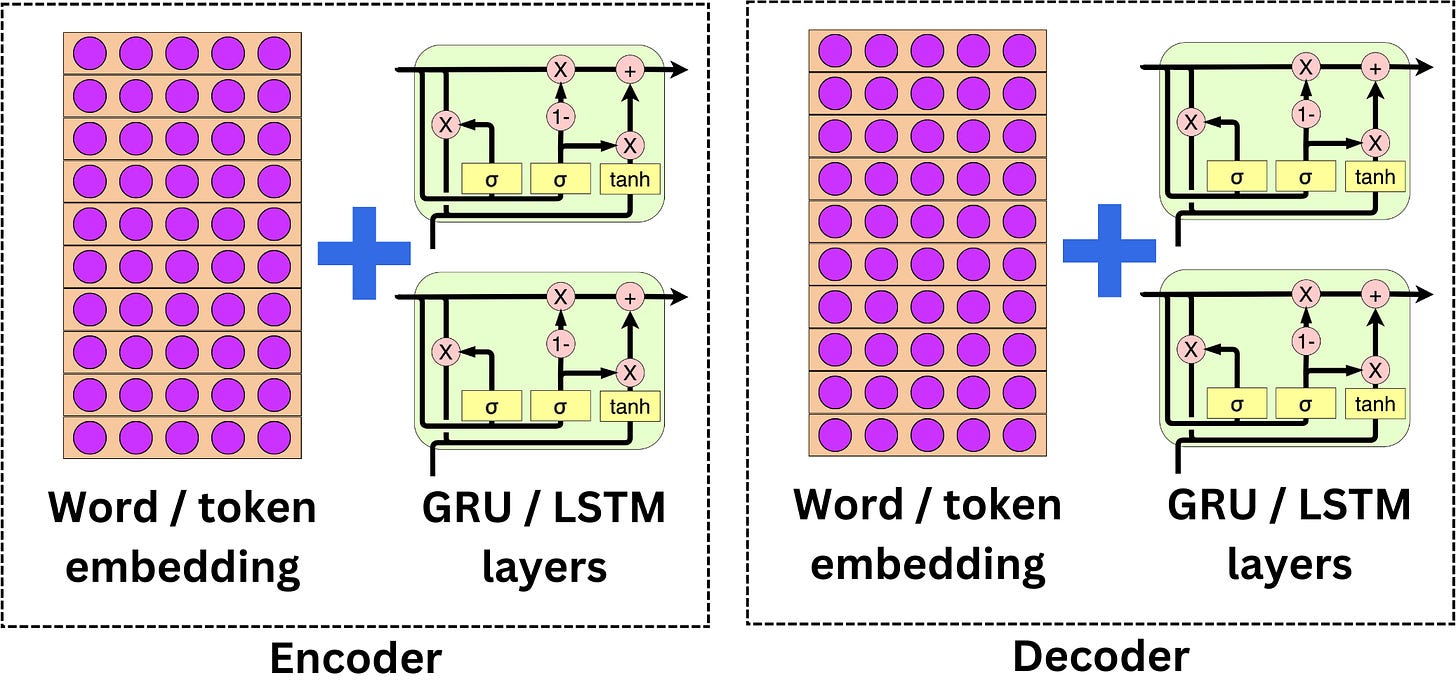
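The word-by-word prediction can be sketched as a greedy decoding loop. This is a minimal sketch that assumes a hypothetical `decoder_step` backed by a hand-written score table, `TOY_SCORES`, in place of a trained decoder. In a real model, each step would also be conditioned on the encoder's representation of the input sentence. The toy table leaves that out so the loop itself stays visible.

```python
# Minimal sketch of iterative (greedy) decoding.
# TOY_SCORES and decoder_step are hypothetical stand-ins for a trained
# RNN decoder; a real decoder would also see the encoded input sentence.
from typing import Dict, List

START, END = "<s>", "</s>"

# Hypothetical scores: TOY_SCORES[previous_word][candidate_next_word] -> score
TOY_SCORES: Dict[str, Dict[str, float]] = {
    START: {"the": 2.0, "cat": 0.5, END: 0.1},
    "the": {"cat": 1.8, "the": 0.2, END: 0.3},
    "cat": {"sleeps": 1.5, "the": 0.4, END: 0.2},
    "sleeps": {END: 2.5, "the": 0.1},
}


def decoder_step(previous_word: str) -> Dict[str, float]:
    """Hypothetical stand-in for one decoder time step."""
    return TOY_SCORES[previous_word]


def greedy_decode(max_len: int = 10) -> List[str]:
    words: List[str] = []
    previous = START
    for _ in range(max_len):
        scores = decoder_step(previous)
        # Greedy choice: take the single highest-scoring word.
        next_word = max(scores, key=scores.get)
        if next_word == END:
            break
        words.append(next_word)
        # Feed the prediction back in as the next step's input.
        previous = next_word
    return words


print(greedy_decode())  # ['the', 'cat', 'sleeps']
```

The `max_len` cap keeps the loop finite if the end token is never predicted. Greedy selection is only one option. Beam search, which keeps several candidate sentences at each step, is a common alternative.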

Both the encoder and the decoder use word embeddings and recurrent units.

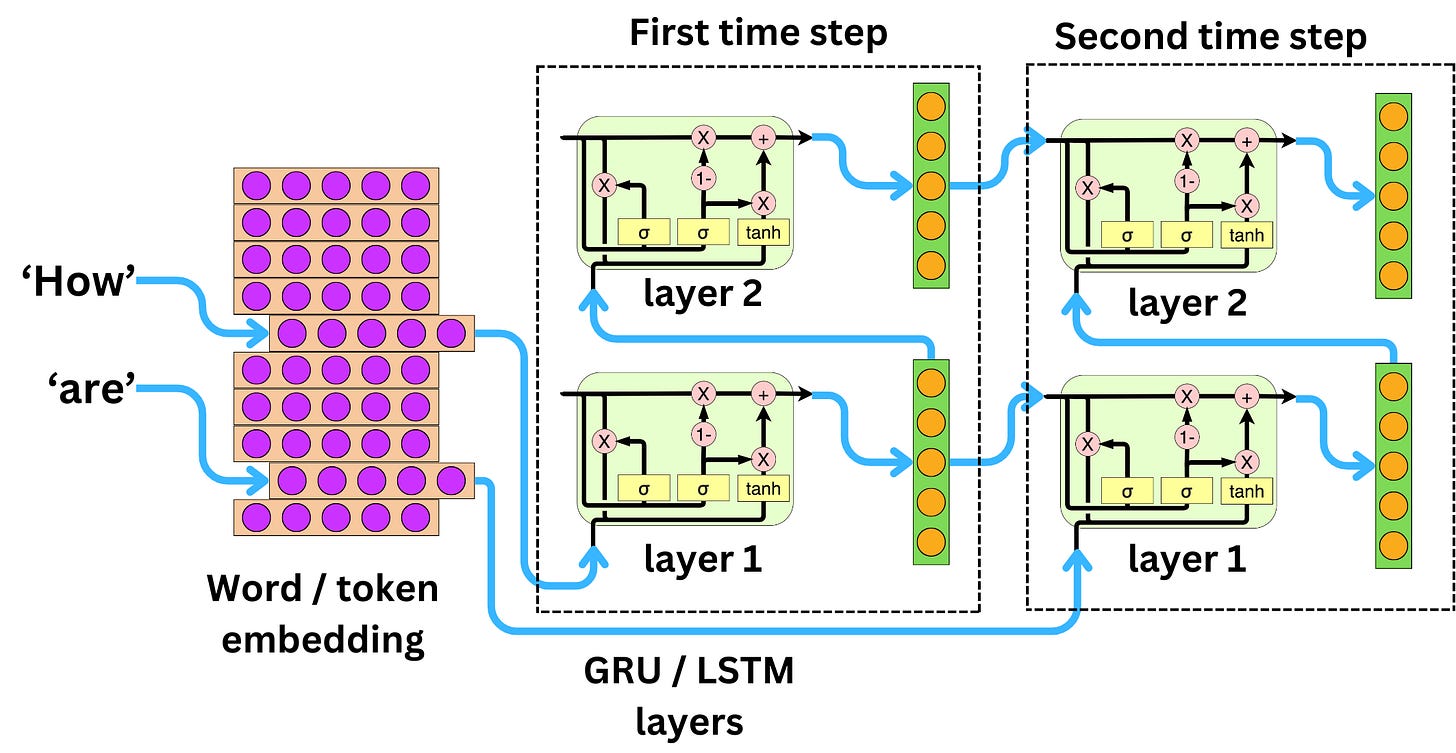

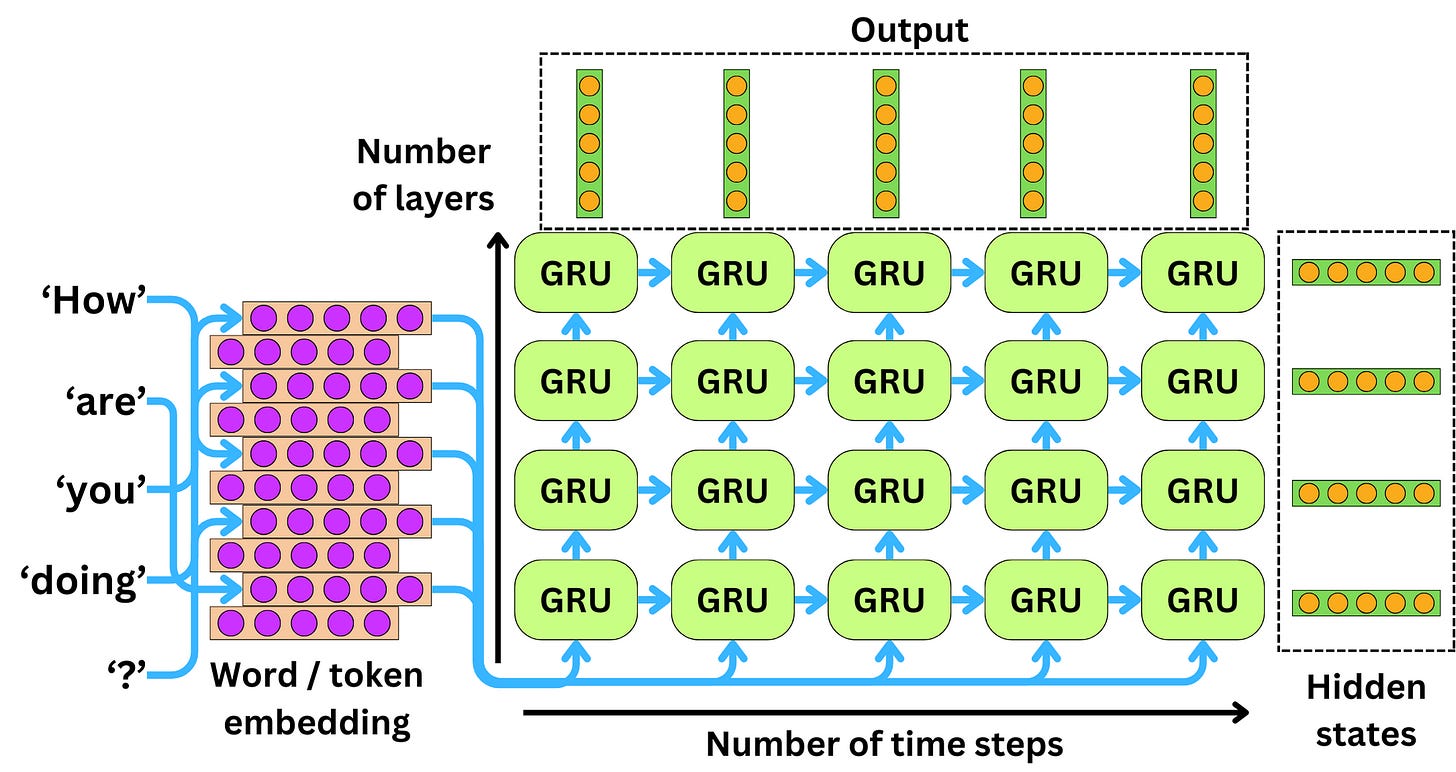
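The two building blocks can be sketched in plain Python. This minimal sketch assumes a plain tanh RNN cell in place of the gated units (such as GRU or LSTM) that practical encoder-decoders usually use. The vocabulary, the sizes `EMBED_DIM` and `HIDDEN_DIM`, and the random weights are all hypothetical; a trained model would learn the embeddings and weights.

```python
# Minimal sketch: a word-embedding table plus one plain tanh RNN cell.
# Vocabulary, dimensions, and weights are hypothetical (random, untrained).
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]

random.seed(0)
EMBED_DIM, HIDDEN_DIM = 4, 3
VOCAB = ["<s>", "</s>", "the", "cat", "sleeps"]


def random_vector(n: int) -> Vector:
    return [random.uniform(-0.5, 0.5) for _ in range(n)]


def random_matrix(rows: int, cols: int) -> Matrix:
    return [random_vector(cols) for _ in range(rows)]


# Word embedding: one vector per vocabulary word.
EMBEDDINGS: Dict[str, Vector] = {w: random_vector(EMBED_DIM) for w in VOCAB}

# Recurrent-unit parameters.
W_x = random_matrix(HIDDEN_DIM, EMBED_DIM)
W_h = random_matrix(HIDDEN_DIM, HIDDEN_DIM)
b = [0.0] * HIDDEN_DIM


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * x for a, x in zip(row, v)) for row in m]


def rnn_cell(x: Vector, h_prev: Vector) -> Vector:
    """One recurrent step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    wx, wh = matvec(W_x, x), matvec(W_h, h_prev)
    return [math.tanh(p + q + r) for p, q, r in zip(wx, wh, b)]


h0 = [0.0] * HIDDEN_DIM
h1 = rnn_cell(EMBEDDINGS["the"], h0)  # embed a word, then update the state
print(len(EMBEDDINGS["the"]), len(h1))  # 4 3
```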

Each word in the input sequence corresponds to one time step of the recurrence.

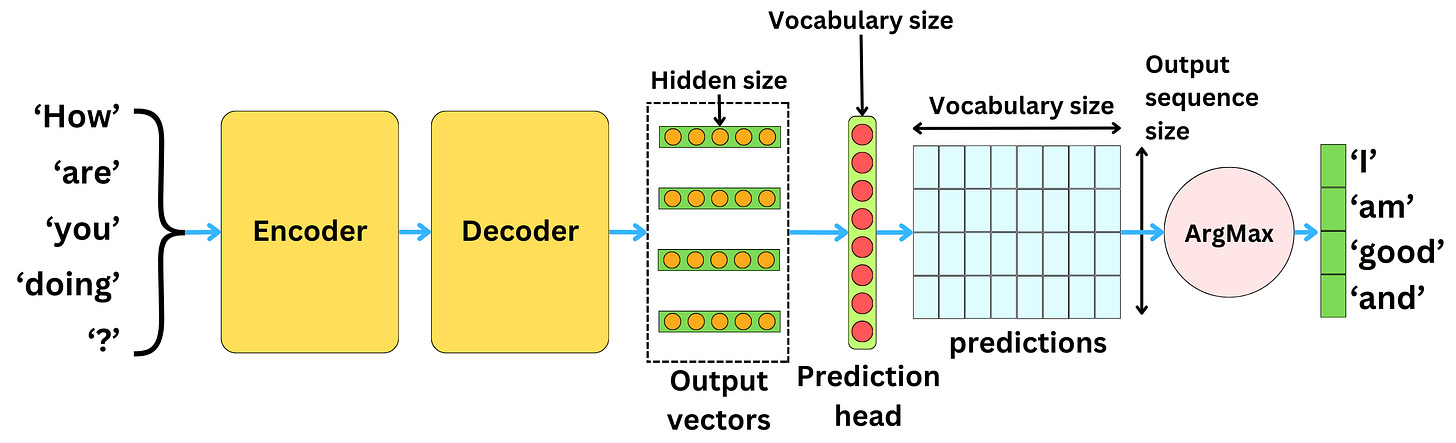
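Written as a recurrence, let $x_t$ be the embedding of the $t$-th word, $f$ the recurrent unit, and $h_t$ the hidden state after step $t$. The zero initial state is a common default and is assumed here:

$$
h_0 = \mathbf{0}, \qquad h_t = f(h_{t-1}, x_t), \quad t = 1, \dots, T
$$

For the three-word input "the cat sleeps" ($T = 3$), this unrolls to $h_1 = f(h_0, x_{\text{the}})$, $h_2 = f(h_1, x_{\text{cat}})$, and $h_3 = f(h_2, x_{\text{sleeps}})$. The same unit $f$ is reused at every step, and the final state $h_3$ has seen the whole sentence.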

Two sets of vectors come out of the recurrent layers: the hidden states and the outputs.
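The distinction is easiest to see with more than one recurrent layer. This minimal sketch follows the convention of PyTorch's `nn.RNN`/`nn.GRU`: the outputs hold the top layer's state at every time step, and the hidden states hold every layer's state at the last time step. It assumes two stacked plain tanh cells with hypothetical random weights and no bias. The random input vectors stand in for word embeddings.

```python
# Minimal sketch: outputs vs. hidden states in a two-layer stacked RNN.
# Weights and inputs are hypothetical random values; tanh cells, no bias.
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]

random.seed(1)
DIM, NUM_LAYERS = 3, 2


def random_matrix(n: int) -> Matrix:
    return [[random.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]


# One (W_x, W_h) pair per layer.
LAYERS = [(random_matrix(DIM), random_matrix(DIM)) for _ in range(NUM_LAYERS)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * x for a, x in zip(row, v)) for row in m]


def cell(w_x: Matrix, w_h: Matrix, x: Vector, h: Vector) -> Vector:
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]


def run_stacked_rnn(inputs: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    hidden = [[0.0] * DIM for _ in range(NUM_LAYERS)]  # one state per layer
    outputs: List[Vector] = []
    for x in inputs:  # one time step per input vector
        layer_input = x
        for i, (w_x, w_h) in enumerate(LAYERS):
            hidden[i] = cell(w_x, w_h, layer_input, hidden[i])
            layer_input = hidden[i]  # this layer's state feeds the next layer
        outputs.append(layer_input)  # top layer's state at this time step
    return outputs, hidden


sentence = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(4)]
outputs, hidden = run_stacked_rnn(sentence)
print(len(outputs), len(hidden))  # 4 2
```

There is one output per time step and one final hidden state per layer. The last output is the top layer's final hidden state. With a single layer, the two would coincide at the last step.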

The hidden states coming from the encoder are passed to the decoder, typically as the decoder's initial hidden state.
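The handoff can be written in the common variant where the encoder's last hidden state initializes the decoder. Let $h_T$ be the encoder's final state, $s_t$ the decoder state, $g$ the decoder's recurrent unit, and $y_{t-1}$ the embedding of the previously predicted word. Taking $y_0$ to be a start-of-sentence token is an assumption here:

$$
s_0 = h_T, \qquad s_t = g(s_{t-1}, y_{t-1}), \quad t = 1, 2, \dots
$$

Some formulations, including the original 2014 model, also feed a summary vector $c$ of the input into every decoder step, $s_t = g(s_{t-1}, y_{t-1}, c)$. This lets the decoder consult the input directly at each step instead of relying only on what survives in $s_t$.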

The prediction head is typically a linear layer that projects each decoder output vector to one score per vocabulary word. A softmax turns these scores into probabilities, and the word with the highest probability is chosen as the prediction.
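The prediction head can be sketched as a linear projection, a softmax, and an argmax. This is a minimal sketch assuming a hypothetical four-word vocabulary, a two-dimensional decoder output, and hand-picked weights `W` and `b`. A trained model would learn these weights, and its vocabulary would be far larger.

```python
# Minimal sketch of a prediction head: linear layer -> softmax -> argmax.
# VOCAB, W, b, and decoder_output are hypothetical illustration values.
import math
from typing import List

Vector = List[float]

VOCAB = ["</s>", "the", "cat", "sleeps"]

# One weight row per vocabulary word, one column per decoder-output dimension.
W = [
    [0.1, -0.2],
    [0.5, 0.3],
    [1.2, 0.8],
    [-0.4, 0.9],
]
b = [0.0, 0.0, 0.0, 0.0]


def linear(o: Vector) -> Vector:
    """Project a decoder output vector to one score (logit) per word."""
    return [sum(w * x for w, x in zip(row, o)) + bias for row, bias in zip(W, b)]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


decoder_output = [0.7, 0.4]
probs = softmax(linear(decoder_output))
best = VOCAB[max(range(len(VOCAB)), key=probs.__getitem__)]
print(best)  # cat
```

Because softmax preserves the ordering of the scores, the argmax of the probabilities is the same as the argmax of the raw scores. The probabilities matter for training (for example, with a cross-entropy loss) and for sampling-based decoding.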
